San Francisco: Apple is working on the most advanced chips for its unannounced AR/VR headset, and a new report now claims that the headset will come with multiple highly sensitive 3D sensing modules to offer innovative hand tracking.

According to Ming-Chi Kuo, the headset's structured light sensors can detect objects in the hands, much as Face ID can read facial expressions to generate Animoji, The Verge reports.

“Capturing the details of hand movement can provide a more intuitive and vivid human-machine UI,” Kuo writes. He also believes the sensors will be able to detect objects from up to 200 per cent further away than the iPhone’s Face ID.

The headset is expected to focus on gaming, media consumption, and communication.

It will reportedly have two processors: one with computing power on par with Apple's M1, and a lower-end chip to handle input from the various sensors.

The headset may come with six to eight optical modules to provide continuous video see-through AR services. The device is also said to have two 4K OLED microdisplays from Sony.

The upcoming Apple headset is expected to be similar to the Oculus Quest, and some of the prototypes being tested include external cameras to enable certain AR features.

It may feature at least 15 camera modules, eye-tracking, possibly iris recognition, and could cost between $2,000 and $3,000.

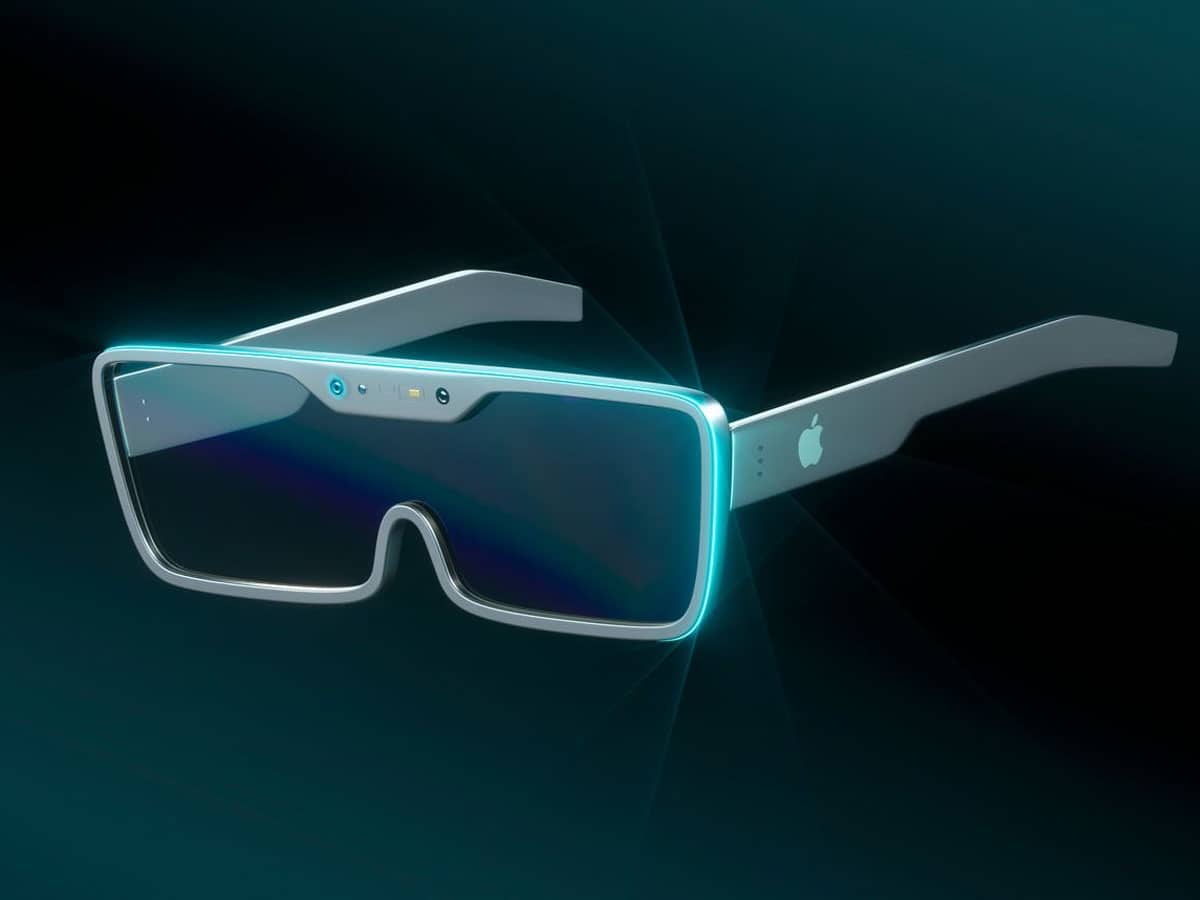

The AR headset is expected to have a sleek, lightweight design that is comfortable enough for the wearer to move around in for prolonged periods.

The device is also expected to have a high-resolution display that lets users read small text while still seeing the people in front of them.