New Delhi: While people use ‘ChatGPT’, the Artificial Intelligence (AI) chatbot created by the for-profit research lab OpenAI, to write poems, essays and computer programmes, cyber-security researchers on Tuesday warned that hackers could potentially use the chatbot, along with OpenAI's Codex, to carry out targeted and efficient cyber-attacks.

Check Point Research (CPR) used ChatGPT and Codex (another OpenAI system, which translates natural language into code) to create malicious phishing emails and code. The aim was to warn of the potential dangers this new AI technology poses to the cyber threat landscape.

The CPR team used ‘ChatGPT’ to produce malicious emails, code and a full infection chain capable of targeting people’s computers.

The team chatted with ChatGPT to refine a phishing email and make the infection chain easier to carry out.

“Using OpenAI’s ChatGPT, CPR was able to create a phishing email, with an attached Excel document containing malicious code capable of downloading reverse shells,” the researchers noted.

In a reverse shell attack, the compromised machine opens a connection back to the attacker's remote computer. It then redirects the input and output of its shell over that connection, giving the attacker remote access to the system.

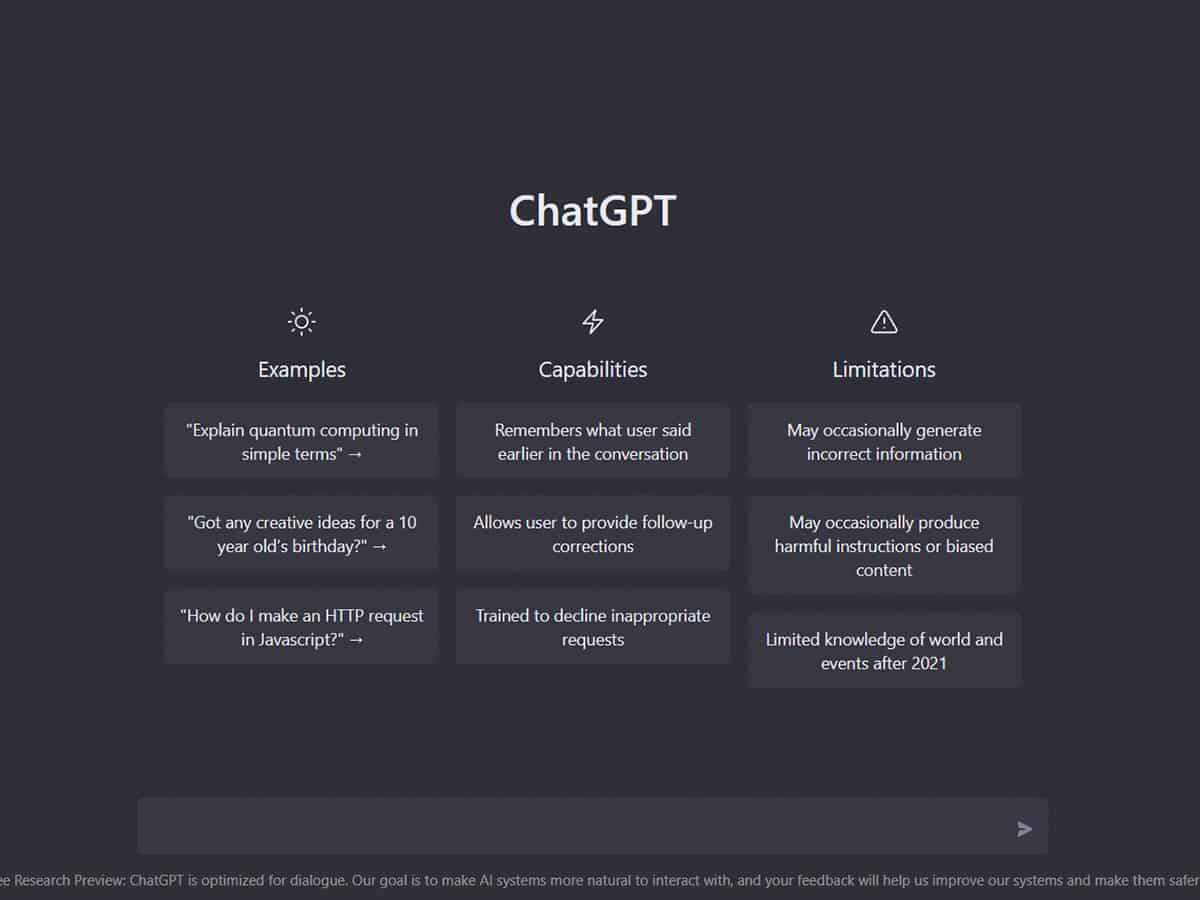

‘ChatGPT’ is an AI chatbot that OpenAI released to the public last month. Users can ask it a wide range of questions and get useful answers.

The researchers said that the expanding role of AI in the cyber world is full of opportunity, but also comes with risks.

“Multiple scripts can be generated easily, with slight variations using different wordings. Complicated attack processes can also be automated as well, using the large language model (LLM) APIs to generate other malicious artifacts,” they wrote.

The report said defenders and threat hunters should stay vigilant and adopt this technology quickly, though cautiously. Otherwise, the security community will be one step behind the attackers.