Concerned over the menace of deepfake technology, which uses AI to create misleading and fake videos, Union minister of electronics, IT and communication Ashwini Vaishnaw held a meeting on Thursday, November 23, with stakeholders, including social media platforms, government officials, and cybersecurity experts, to tackle the issue.

The meeting was held to chart out a strategy to combat the challenges posed by deepfake content targeting individuals and groups. It was decided that the government's strategy to tackle the menace of deepfakes should have four aspects: detection, prevention, a reporting mechanism, and awareness. “The deepfake has emerged as a huge social threat and needs immediate action,” the minister said during the meeting.

“We agreed that we will start drafting the regulation today. And within a very short timeframe, we will have a new set of regulation for deepfakes. The regulations will be drafted in coming weeks,” he added. All stakeholders agreed on the seriousness of the threat and recognised the need to have strict regulations in place. “Deepfake does not come under free speech, it is a threat to the society,” the minister said.

Call for legal remedies

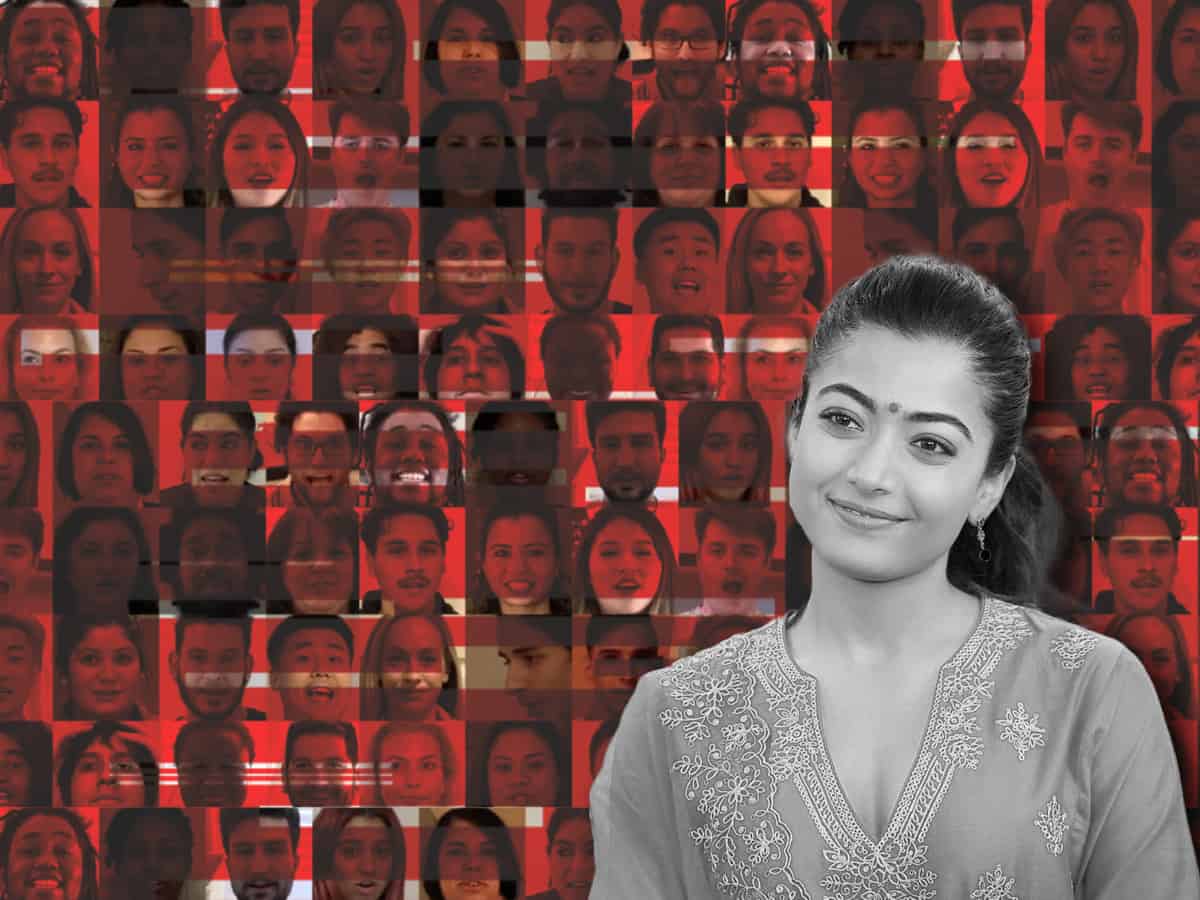

Even though deepfakes have been around for some time, they caught the attention of authorities after a fake video of Bollywood actor Rashmika Mandanna surfaced on the internet recently. In response, several celebrities expressed deep concern over a technology that could be used to tarnish anybody’s image through fake and misleading videos created with the help of artificial intelligence.

Besides the actors, Prime Minister Narendra Modi recently emphasised the urgent need to counter deepfakes. As a result, the Union ministry of electronics and IT swung into action and called meetings with stakeholders to regulate the pervasive technology.

Plan of action

During the meeting on Thursday, Union minister Ashwini Vaishnaw called for an immediate action plan to address concerns over deepfake technology. The Centre is now set to unveil a clear and actionable plan within the next 10 days, focusing on detection, prevention, a reporting mechanism, and awareness. The meeting also discussed labelling and watermarking content.

The meeting was attended by social media companies, NASSCOM, and professors working on artificial intelligence.

What is deepfake?

According to MIT, the term ‘deepfake’ first emerged in late 2017 when a Reddit user posted pornographic videos on the news aggregation site. The videos were generated using open-source face-swapping technology.

A deepfake is a kind of synthetic media that uses AI technology to manipulate or generate audiovisual content that appears real, often with malicious intent. Deepfakes use a form of AI called deep learning to make images or videos of fake events. The result is usually a blend of real and fabricated media, which threatens public trust.

Deepfakes are usually convincing pieces of audio and video showing people saying or doing things they have never said or done in real life. They are often created and used with the intention of manipulating people, spreading misinformation, and tarnishing the image of individuals, groups, businesses and governments.

The exponential growth of AI technology has caused a concerning rise in deepfakes, as it allows hyper-realistic audiovisual content to be produced with little effort and expense.

How to spot a deepfake?

Deepfake technology is one of the most dangerous weapons in the hands of cybercriminals. According to MIT researchers, there is no foolproof method of protection against deepfakes. However, certain indicators can help verify the authenticity of the content you come across on social media.

For example, the widely circulated Rashmika Mandanna deepfake video initially displayed a different face before transitioning to Mandanna’s image superimposed over British model Zara Patel’s body.

The manipulation became apparent once the individual appeared fully in the frame, revealing discrepancies in lip movements and blinking that stem from AI’s limitations in accurately tracking eye and mouth motions.

Even so, advances in AI technology are making it harder to distinguish fake content from authentic content, despite telltale flaws such as misaligned movements.